Building Semantic Kernel Agents with Model Context Protocol (MCP) Plugins in Python

Effective AI systems need more than conversational ability: they must also perform actions and access external resources. This guide shows how to integrate Microsoft's Semantic Kernel (SK) with the Model Context Protocol (MCP) to build AI agents that interact with external systems. By combining SK's orchestration framework with MCP's standardized interface, developers can create AI applications that use both in-process and external tools. The approach suits enterprise solutions, from personal productivity assistants that manage schedules to business applications that call company APIs. The sections below walk through the implementation step by step.

What is Semantic Kernel (SK)?

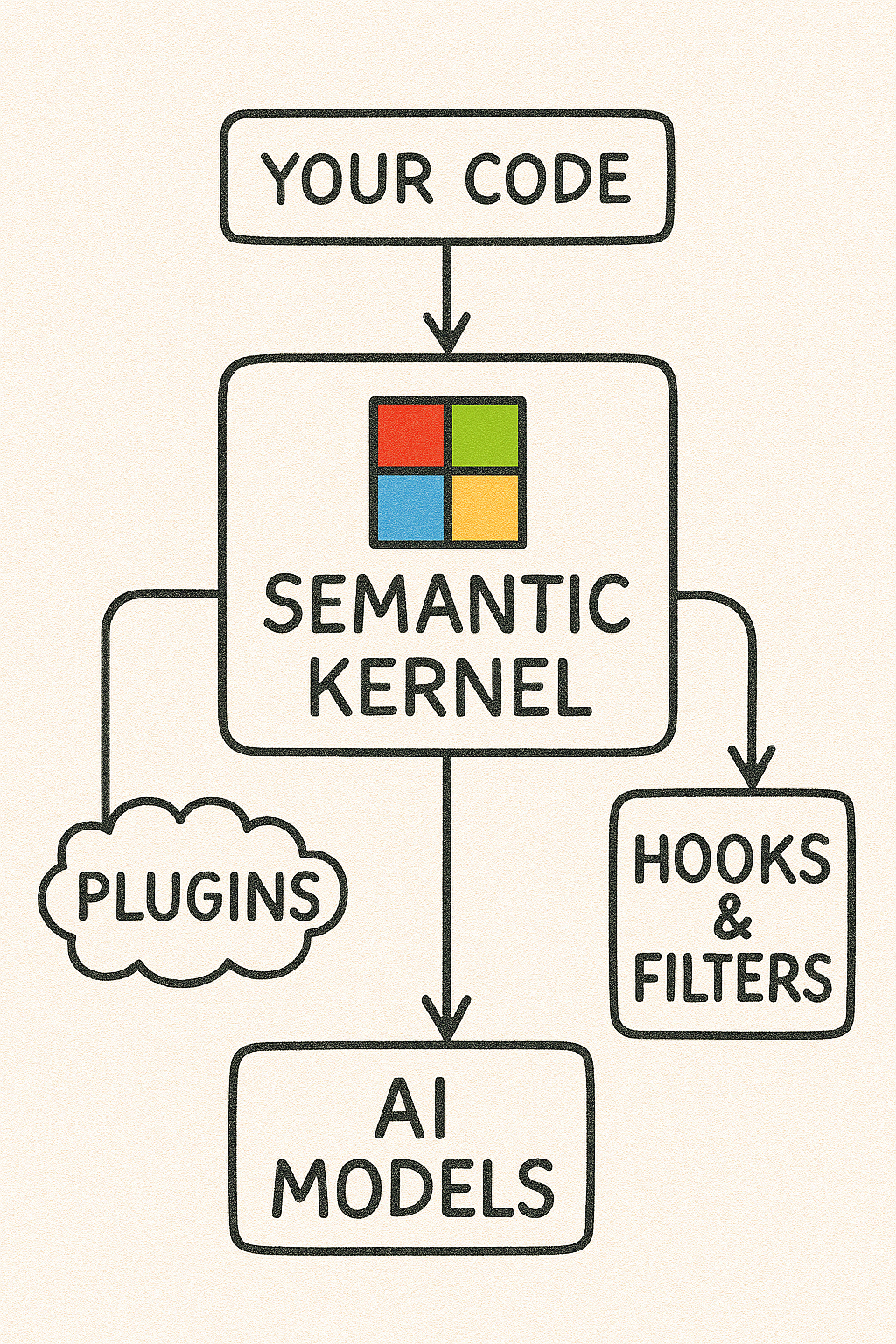

Semantic Kernel (SK) is an open-source SDK and framework from Microsoft for building intelligent AI applications. It provides a model-agnostic orchestration layer that lets developers integrate Large Language Models (LLMs) into their code and create AI agents with ease. In essence, SK acts as middleware between your code and AI models, enabling you to blend natural language prompts with traditional software functions.

Semantic Kernel sits at the center of your application's AI integration. It connects your code (top) and plugins (left) with the latest AI models (bottom), while also supporting hooks & filters for security and monitoring (right). This modular design makes SK future-proof – you can swap in new AI models as they arrive without major code changes. The plugin architecture allows reusing your custom functions or connecting external APIs as "skills" the AI can invoke.

Architecture Basics

SK's architecture revolves around a Kernel object that manages AI model services, memory (for context storage), and skills/plugins. Developers define functions (skills) – which can be native code functions or prompt templates – and register them as plugins. When the AI model needs to perform an action (e.g. retrieve data or execute logic), it can ask SK to invoke the appropriate function. SK translates the model's function-call request into a real code call and returns the result back to the AI model.

This approach lets you automate business processes by having the AI trigger your existing APIs or workflows through natural language. The framework is modular and extensible – you can plug in new functionality easily, and even share or reuse plugins (e.g. via OpenAPI definitions) across different applications.
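To make the dispatch step concrete, here is a minimal sketch of how a host can turn a model's function-call request into a real code call. It is not Semantic Kernel's implementation: ToyKernel, get_customer, the "crm" plugin name, and the request shape are all hypothetical and exist only for illustration.

```python
import json
from typing import Any, Callable, Dict


class ToyKernel:
    """Hypothetical stand-in for a kernel: maps 'plugin-function' names to callables."""

    def __init__(self) -> None:
        self._functions: Dict[str, Callable[..., Any]] = {}

    def add_function(self, plugin_name: str, function: Callable[..., Any]) -> None:
        # Register the callable under a fully qualified name
        self._functions[f"{plugin_name}-{function.__name__}"] = function

    def invoke(self, function_call_json: str) -> Any:
        # Parse the model's request, look up the function, call it with the arguments
        call = json.loads(function_call_json)
        function = self._functions[call["name"]]
        return function(**call["arguments"])


def get_customer(customer_id: int) -> dict:
    """Hypothetical native function standing in for an internal API."""
    return {"id": customer_id, "name": "Ada"}


kernel = ToyKernel()
kernel.add_function("crm", get_customer)

# A function-call request shaped the way a model might emit it (assumed format)
request = '{"name": "crm-get_customer", "arguments": {"customer_id": 7}}'
result = kernel.invoke(request)
print(result)
```

The model only ever produces the JSON request; the host owns the lookup and the call, which is what makes it possible to add filtering and logging around every invocation.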

Use Cases

Semantic Kernel is used to build a variety of AI-driven solutions, from chatbots and Copilot-style assistants to complex multi-agent systems that coordinate specialized AI agents. For example, a developer can create a chatbot that not only answers questions but also calls internal APIs (via plugins) to book meetings or fetch customer data. Enterprises are leveraging SK for its reliability and observability – it supports integration of telemetry, security filters, and stable APIs, making it suitable for large-scale, enterprise-grade AI applications.

What is the Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context and tools to LLMs. Think of MCP as a universal plugin interface for AI models – it defines a way for AI agents to connect to external data sources, tools, or services in a consistent manner. By using MCP, different AI frameworks and applications can interoperate with the same set of tools. This promotes interoperability (tools written for one AI system can be used by another) and gives AI models more flexibility in how they access information.

In practical terms, MCP defines a client-server architecture for AI plugins:

- An MCP Server hosts a collection of "tools" (functions, data retrieval endpoints, etc.) and exposes them via the standardized protocol.

- An MCP Client (typically the AI agent or host app) connects to the server and can request a list of available tools and invoke them as needed.
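The list-then-invoke exchange can be sketched with plain JSON-RPC 2.0 messages, which is the message format MCP builds on. The tools/list and tools/call method names follow the MCP specification, but everything else here is a simplification: the in-memory TOOLS table and handle_request function are hypothetical, and a real server also performs an initialization handshake and publishes input schemas.

```python
import json

# Hypothetical in-memory tool table standing in for a real MCP server
TOOLS = {
    "add_numbers": {
        "description": "Add two numbers and return the result.",
        "handler": lambda x, y: x + y,
    }
}


def handle_request(raw: str) -> str:
    """Answer a simplified JSON-RPC 2.0 request for tools/list or tools/call."""
    request = json.loads(raw)
    method = request["method"]
    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": tool["description"]}
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request["params"]
        value = TOOLS[params["name"]]["handler"](**params["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# Client side: first discover the tools, then call one of them
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
call_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "add_numbers", "arguments": {"x": 3, "y": 5}},
}
list_response = json.loads(handle_request(json.dumps(list_request)))
call_response = json.loads(handle_request(json.dumps(call_request)))
print(list_response["result"]["tools"])
print(call_response["result"]["content"][0]["text"])
```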

Benefits

MCP makes it easier to build plugin-based agent systems by providing a common language for tools. It enhances how AI models interface with data and actions, resulting in richer contextual understanding and capabilities. Developers can switch out or upgrade AI models without changing how they connect to tools (since MCP abstracts the connection). Likewise, the same MCP tool server could serve different AI assistants (even across languages or platforms).

Potential applications span many domains – for instance, an MCP server could provide a knowledge base lookup, database queries, or business workflows, all of which any compatible AI agent can call. Overall, MCP brings flexibility (standard connectors for various data sources) and extensibility to AI solutions, much like having a universal "USB-C port" for connecting any tool to your AI agent.

Extending SK Agents with Plugins (and MCP Plugins)

One of Semantic Kernel's core strengths is its plugin ecosystem. In SK, a plugin (sometimes called a "skill") is essentially a collection of functions that the AI agent can call as tools. Plugins can be implemented in different ways – you can write native code functions, create prompt templates, import OpenAPI REST endpoints, or use the Model Context Protocol (MCP) to interface with an external tool server.

This means an SK agent isn't limited to just what's in your local code; it can be extended with powerful external capabilities. When you attach plugins to an SK agent, under the hood Semantic Kernel will expose those functions to the LLM. Modern LLMs like GPT-4 support function calling, which allows the model to ask the host application (SK) to execute a specific function when appropriate.

SK acts as the broker: it presents the available plugin functions to the model, the model decides if/when to invoke them, and then SK carries out the call and returns the result. This mechanism enables tool-using agents – your agent can, for example, decide to call a "Weather API" plugin function to get weather info, or an "Email" plugin to send a message, based on the conversation.

MCP Plugins in SK

An MCP-based plugin allows an SK agent to connect to an external MCP server as one of its tool sources. Essentially, SK uses an MCP client to fetch the list of available tools from the server and dynamically wraps each tool as an SK function. These become part of the agent's skill set. So if you have an MCP server providing, say, financial data tools, your agent can incorporate those and call them just like any other plugin function.
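The wrapping step described above can be illustrated without any SK or MCP dependency. In this sketch, TOOL_LIST, fake_call_tool, and wrap_tools are hypothetical names and the financial data is made up; the point is only to show how a list of remote tool descriptors can become local callables that forward to the server.

```python
from typing import Any, Callable, Dict, List

# Hypothetical tool descriptors, shaped like a simplified tools/list result
TOOL_LIST: List[Dict[str, str]] = [
    {"name": "get_quote", "description": "Return the latest price for a ticker."},
    {"name": "get_currency", "description": "Return the trading currency for a ticker."},
]


def fake_call_tool(name: str, arguments: Dict[str, Any]) -> Any:
    """Stand-in for the round trip to the server (made-up data)."""
    data = {"get_quote": 101.5, "get_currency": "USD"}
    return data[name]


def wrap_tools(
    tools: List[Dict[str, str]],
    call_tool: Callable[[str, Dict[str, Any]], Any],
) -> Dict[str, Callable[..., Any]]:
    """Build one local callable per remote tool; each forwards to call_tool."""
    wrapped: Dict[str, Callable[..., Any]] = {}
    for tool in tools:
        def proxy(_name: str = tool["name"], **kwargs: Any) -> Any:
            # _name is bound as a default so each proxy keeps its own tool name
            return call_tool(_name, kwargs)

        proxy.__name__ = tool["name"]
        proxy.__doc__ = tool["description"]
        wrapped[tool["name"]] = proxy
    return wrapped


functions = wrap_tools(TOOL_LIST, fake_call_tool)
print(functions["get_quote"](ticker="MSFT"))
print(functions["get_currency"].__doc__)
```

Carrying the description over to each wrapper matters because that text is what the model reads when deciding which tool to call.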

The big advantage is that MCP plugins decouple the tool implementation from the agent – the tools can run in a separate process (even on a different machine or written in a different language), and any MCP-compliant agent can use them. This opens up possibilities like reusing the same tools across multiple AI apps or sharing tools within a company.

Microsoft's Semantic Kernel has built-in support for MCP, so developers can seamlessly register an MCP server as a plugin source. For example, if you already have a set of SK plugins (functions) that you wrote in Python, you might want to expose them via MCP so that other AI agents or platforms could use them. Conversely, if someone has built an MCP server with useful tools (say a database query tool), you can plug it into your SK agent instead of rewriting that functionality.

This interoperability is one of the reasons MCP integration in SK is exciting – it allows AI solutions to compose capabilities across ecosystems. Moreover, by leveraging SK's plugin system, you also inherit SK's features like content filtering and logging. Every tool invocation can be passed through SK's filters (for safety) and be logged/traced via SK's observability hooks, which is important for enterprise scenarios.

Before You Code

Before diving into implementation, let's ensure you have everything needed to run the examples.

Prerequisites

- Python 3.10 or later

- Basic knowledge of Python programming

- An OpenAI API key (for access to models like GPT-4)

- Basic understanding of LLMs and AI concepts

Setting Up Your Environment

First, create a virtual environment and install the required packages:

# Create and activate a virtual environment
python -m venv sk-mcp-env
source sk-mcp-env/bin/activate  # On Windows, use: sk-mcp-env\Scripts\activate

# Install required packages (the MCP Python SDK is published on PyPI as "mcp")
pip install semantic-kernel mcp python-dotenv openai

Configuration

Create a .env file in your project directory with your OpenAI API key:

echo "OPENAI_API_KEY=your-api-key-here" > .env

Replace your-api-key-here with your actual OpenAI API key. This file will be loaded by the examples to authenticate with OpenAI.

With the setup complete, you're ready to start building your first Semantic Kernel agent!

How to Create a Basic Semantic Kernel Agent (Python)

Let's start by building a simple SK agent in Python. This agent will use OpenAI to generate responses using Semantic Kernel's modern Kernel-based approach.

#!/usr/bin/env python3
# Basic Semantic Kernel agent with streaming responses

import asyncio
import os
import pathlib

from dotenv import load_dotenv
from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion
from semantic_kernel.contents import ChatHistory
from semantic_kernel.contents.streaming_chat_message_content import StreamingChatMessageContent
from semantic_kernel.contents.utils.author_role import AuthorRole
from semantic_kernel.functions import KernelArguments


async def main():
    # Load environment variables from .env file
    current_dir = pathlib.Path(__file__).parent
    env_path = current_dir / ".env"
    load_dotenv(dotenv_path=env_path)

    # Initialize the kernel - the orchestration layer for our agent
    kernel = Kernel()

    # Add an OpenAI service
    api_key = os.getenv("OPENAI_API_KEY")
    if not api_key:
        raise ValueError("OPENAI_API_KEY environment variable is not set. Check your .env file.")
    print(f"Using API key: {api_key[:5]}...")
    model_id = "gpt-4o"  # Choose your model
    service = OpenAIChatCompletion(ai_model_id=model_id, api_key=api_key)
    kernel.add_service(service)

    # Create a chat history with system instructions
    history = ChatHistory()
    history.add_system_message("You are a helpful assistant specialized in explaining concepts.")

    # Create a simple chat function once, before the loop
    # (this could also be loaded from a prompt template)
    chat_function = kernel.add_function(
        plugin_name="chat",
        function_name="respond",
        prompt="{{$chat_history}}"
    )

    print("Starting chat. Type 'exit' to end the conversation.")
    while True:
        user_input = input("User:> ")
        if user_input.lower() == "exit":
            break

        # Add the user message to history
        history.add_user_message(user_input)

        # Pass the full history as the function's argument
        arguments = KernelArguments(chat_history=history)

        # Stream the response
        print("Assistant:> ", end="", flush=True)
        response_chunks = []
        async for message in kernel.invoke_stream(
            chat_function,
            arguments=arguments,
        ):
            chunk = message[0]
            if isinstance(chunk, StreamingChatMessageContent) and chunk.role == AuthorRole.ASSISTANT:
                print(str(chunk), end="", flush=True)
                response_chunks.append(chunk)
        print()  # New line after response

        # Add the full response to history
        full_response = "".join(str(chunk) for chunk in response_chunks)
        history.add_assistant_message(full_response)


if __name__ == "__main__":
    asyncio.run(main())

In this example, we:

- Initialize a Semantic Kernel Kernel object

- Configure it with OpenAI's chat completion service

- Set up a chat history with a system message to define the assistant's behavior

- Create a simple chat function using the chat history as context

- Stream the assistant's response chunks for a better user experience

- Add the complete response back to the history for context in the next turn

This basic agent doesn't have any plugins or tools attached – it's just the LLM responding with its own knowledge. In the next sections, we'll extend this agent with MCP plugin capabilities.
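The stream-then-join pattern used by the agent can be tried without an API key. The sketch below replaces the model with a hypothetical fake_stream generator that yields fixed-size pieces of a string; only the accumulation logic mirrors the agent code.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_stream(text: str, size: int = 4) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming model: yields the text in small pieces."""
    for start in range(0, len(text), size):
        await asyncio.sleep(0)  # give control back to the event loop between chunks
        yield text[start:start + size]


async def collect(stream: AsyncIterator[str]) -> str:
    """Print each chunk as it arrives and return the joined response."""
    chunks: List[str] = []
    async for chunk in stream:
        print(chunk, end="", flush=True)
        chunks.append(chunk)
    print()  # new line after the streamed response
    return "".join(chunks)


full_response = asyncio.run(collect(fake_stream("Streaming keeps the interface responsive.")))
```

Keeping the chunks in a list and joining once at the end is what lets the complete reply be stored in the chat history after the user has already seen it arrive piece by piece.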

Creating a Simple MCP Server in Python (MCP via stdio)

Now, let's create a simple MCP server that our agent can use as a plugin. We'll create a calculator tool server that can perform basic math operations.

#!/usr/bin/env python3
# Simple MCP server with a calculator function

import sys

from mcp.server.fastmcp import FastMCP

# Instantiate an MCP server instance with a name
mcp = FastMCP("CalculatorServer")

# With the stdio transport, stdout carries the protocol messages,
# so diagnostic output is written to stderr instead.


# Define a tool function using a decorator
@mcp.tool()
def add_numbers(x: float, y: float) -> float:
    """Add two numbers and return the result."""
    print(f"📝 Calculating: {x} + {y}", file=sys.stderr)
    return x + y


# Additional calculator functions to show extensibility
@mcp.tool()
def subtract_numbers(x: float, y: float) -> float:
    """Subtract the second number from the first number."""
    print(f"📝 Calculating: {x} - {y}", file=sys.stderr)
    return x - y


@mcp.tool()
def multiply_numbers(x: float, y: float) -> float:
    """Multiply two numbers together."""
    print(f"📝 Calculating: {x} * {y}", file=sys.stderr)
    return x * y


@mcp.tool()
def divide_numbers(x: float, y: float) -> float:
    """Divide the first number by the second number."""
    if y == 0:
        error_msg = "Cannot divide by zero"
        print(f"❌ Error: {error_msg}", file=sys.stderr)
        raise ValueError(error_msg)
    print(f"📝 Calculating: {x} / {y}", file=sys.stderr)
    return x / y


if __name__ == "__main__":
    # This server will be launched automatically by the MCP stdio agent
    # You don't need to run this file directly - it will be spawned as a subprocess
    # Run the MCP server using standard input/output transport
    mcp.run(transport="stdio")

Let's break down what's happening:

- We create a FastMCP server named "CalculatorServer". This sets up an MCP server object to which we can attach tool functions.

- We define several Python functions like add_numbers, subtract_numbers, etc., and decorate them with @mcp.tool(). This tells FastMCP to expose these functions as tools in the MCP protocol.

- Each function has a docstring that describes what it does, which will be used to help the LLM understand when to use this tool.

- Finally, we call mcp.run(transport="stdio"), which launches the MCP server using the stdio transport, meaning it will communicate via standard input/output streams.

This server doesn't need to be run directly - it will be launched as a subprocess by our agent in the next section.

Note that we're using the generic mcp.run(transport="stdio") method to specify the transport type. This approach provides consistency across different transport mechanisms.
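To see why the docstring and type hints matter, here is a rough sketch of what a tool decorator can record about a function. This is not how FastMCP is implemented (a real server derives a full JSON schema); the tool decorator and REGISTRY dictionary below are hypothetical and use only the standard library.

```python
import inspect
from typing import Any, Callable, Dict

# Hypothetical registry filled in by the decorator
REGISTRY: Dict[str, Dict[str, Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record a function's name, docstring and parameter types, then return it unchanged."""
    signature = inspect.signature(func)
    REGISTRY[func.__name__] = {
        "description": inspect.getdoc(func) or "",
        "parameters": {
            name: parameter.annotation.__name__
            for name, parameter in signature.parameters.items()
        },
        "handler": func,
    }
    return func


@tool
def add_numbers(x: float, y: float) -> float:
    """Add two numbers and return the result."""
    return x + y


entry = REGISTRY["add_numbers"]
print(entry["description"])
print(entry["parameters"])
```

The description and parameter metadata are the only things a model sees about a tool, so a vague docstring or missing type hints make it harder for the model to pick the right tool and supply valid arguments.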

Integrating the SK Agent with the MCP Server (stdio plugin)

With our MCP server code in place, let's integrate it into our Semantic Kernel agent. We'll use the MCPStdioPlugin to spawn and connect to the calculator server.

#!/usr/bin/env python3
# Semantic Kernel agent with MCP stdio plugin integration

import asyncio
import os
import sys
import pathlib

from dotenv import load_dotenv
from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion
from semantic_kernel.connectors.ai.open_ai import OpenAIChatPromptExecutionSettings
from semantic_kernel.connectors.ai.function_choice_behavior import FunctionChoiceBehavior
from semantic_kernel.connectors.mcp import MCPStdioPlugin
from semantic_kernel.contents import ChatHistory
from semantic_kernel.contents.streaming_chat_message_content import StreamingChatMessageContent
from semantic_kernel.contents.utils.author_role import AuthorRole
from semantic_kernel.functions import KernelArguments


async def main():
    # Load environment variables from .env file
    current_dir = pathlib.Path(__file__).parent
    env_path = current_dir / ".env"
    load_dotenv(dotenv_path=env_path)

    # Find the correct path to the MCP server script
    mcp_server_path = current_dir / "02_mcp_server.py"

    # Make sure the server script exists
    if not mcp_server_path.exists():
        print(f"Error: MCP server script not found at {mcp_server_path}")
        print("Make sure 02_mcp_server.py is in the same directory as this script.")
        sys.exit(1)

    print("Setting up the Math Assistant with MCP calculator tools...")

    # Initialize the kernel
    kernel = Kernel()

    # Add an OpenAI service with function calling enabled
    api_key = os.getenv("OPENAI_API_KEY")
    if not api_key:
        print("Error: OPENAI_API_KEY environment variable is not set.")
        print("Please set it in your .env file or environment variables.")
        sys.exit(1)

    model_id = "gpt-4o"  # Use a model that supports function calling
    service = OpenAIChatCompletion(ai_model_id=model_id, api_key=api_key)
    kernel.add_service(service)

    # Create the completion service request settings
    settings = OpenAIChatPromptExecutionSettings()
    settings.function_choice_behavior = FunctionChoiceBehavior.Auto()

    # Configure and use the MCP plugin for our calculator using async context manager
    async with MCPStdioPlugin(
        name="CalcServer",
        command=sys.executable,  # Same interpreter (and virtual environment) as this script
        args=[str(mcp_server_path)]  # Path to our MCP server script
    ) as mcp_plugin:
        # Register the MCP plugin with the kernel
        try:
            kernel.add_plugin(mcp_plugin, plugin_name="calculator")
        except Exception as e:
            print(f"Error: Could not register the MCP plugin: {str(e)}")
            sys.exit(1)

        # Create a chat history with system instructions
        history = ChatHistory()
        history.add_system_message(
            "You are a math assistant. Use the calculator tools when needed to solve math problems. "
            "You have access to add_numbers, subtract_numbers, multiply_numbers, and divide_numbers functions."
        )

        # Define a simple chat function
        chat_function = kernel.add_function(
            plugin_name="chat",
            function_name="respond",
            prompt="{{$chat_history}}"
        )

        print("\n┌────────────────────────────────────────────┐")
        print("│ Math Assistant ready with MCP Calculator │")
        print("└────────────────────────────────────────────┘")
        print("Type 'exit' to end the conversation.")
        print("\nExample questions:")
        print("- What is the sum of 3 and 5?")
        print("- Can you multiply 6 by 7?")
        print("- If I have 20 apples and give away 8, how many do I have left?")

        while True:
            user_input = input("\nUser:> ")
            if user_input.lower() == "exit":
                break

            # Add the user message to history
            history.add_user_message(user_input)

            # Prepare arguments with history and settings
            arguments = KernelArguments(
                chat_history=history,
                settings=settings
            )

            try:
                # Stream the response
                print("Assistant:> ", end="", flush=True)
                response_chunks = []
                async for message in kernel.invoke_stream(
                    chat_function,
                    arguments=arguments
                ):
                    chunk = message[0]
                    if isinstance(chunk, StreamingChatMessageContent) and chunk.role == AuthorRole.ASSISTANT:
                        print(str(chunk), end="", flush=True)
                        response_chunks.append(chunk)
                print()  # New line after response

                # Add the full response to history
                full_response = "".join(str(chunk) for chunk in response_chunks)
                history.add_assistant_message(full_response)
            except Exception as e:
                print(f"\nError: {str(e)}")
                print("Please try another question.")


if __name__ == "__main__":
    try:
        asyncio.run(main())
    except KeyboardInterrupt:
        print("\nExiting the Math Assistant. Goodbye!")
    except Exception as e:
        print(f"\nError: {str(e)}")
        print("The application has encountered a problem and needs to close.")

In this code:

- We load environment variables and find the path to our MCP server script

- We initialize Semantic Kernel and add the OpenAI service

- We set up function calling behavior with FunctionChoiceBehavior.Auto()

- We create an MCPStdioPlugin and connect to it using an async context manager pattern:

async with MCPStdioPlugin(...) as mcp_plugin:
    # Use the plugin within this context

- We register this plugin with the kernel using kernel.add_plugin()

- We create a chat function and configure it with a system message that tells the AI to use the calculator tools

- We handle user input and stream the responses using kernel.invoke_stream()

When you run this code and ask a math question like "What is the sum of 3 and 5?", here's what happens:

- The LLM sees your question and recognizes it as a math problem

- It decides to use the add_numbers tool to solve it

- SK sends this request to the MCP server through the stdio channel

- The MCP server executes the function and returns the result (8)

- SK sends this result back to the LLM, which generates a response like "The sum of 3 and 5 is 8"

This process happens seamlessly, allowing the AI to leverage external tools without needing to implement the math logic itself.
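The round trip in the steps above can be simulated end to end with a scripted stand-in for the model. Nothing here calls OpenAI, SK, or MCP: scripted_model, run_turn, and the message dictionaries are hypothetical, and the fake model always asks for add_numbers with 3 and 5, so the sketch only demonstrates the control flow.

```python
from typing import Any, Callable, Dict, List

# Hypothetical local tool table playing the role of the MCP server
TOOLS: Dict[str, Callable[..., Any]] = {"add_numbers": lambda x, y: x + y}


def scripted_model(messages: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Fake model: requests a tool first, then answers from the tool result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The sum of 3 and 5 is {last['content']}"}
    call = {"name": "add_numbers", "arguments": {"x": 3, "y": 5}}
    return {"role": "assistant", "tool_call": call}


def run_turn(user_text: str) -> str:
    """Loop until the model stops requesting tools and returns a final answer."""
    messages: List[Dict[str, Any]] = [{"role": "user", "content": user_text}]
    while True:
        reply = scripted_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # no tool requested: this is the final answer
        # The host, not the model, executes the tool and reports the result back
        result = TOOLS[call["name"]](**call["arguments"])
        messages.append({"role": "tool", "content": str(result)})


answer = run_turn("What is the sum of 3 and 5?")
print(answer)
```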

Upgrading to Server-Sent Events (SSE) with FastMCP

While the stdio-based approach works well for local development, in production scenarios you'll often want a more robust communication method. Server-Sent Events (SSE) allows the MCP server to run as a separate service that any number of agents can connect to.

First, let's create the SSE version of our MCP server:

#!/usr/bin/env python3
# MCP server with SSE transport for calculator functions

from mcp.server.fastmcp import FastMCP

# Instantiate an MCP server instance with a name
mcp = FastMCP("CalculatorServerSSE")


# Define a tool function using a decorator
@mcp.tool()
def add_numbers(x: float, y: float) -> float:
    """Add two numbers and return the result."""
    print(f"📝 Calculating: {x} + {y}")
    return x + y


# Additional calculator functions to show extensibility
@mcp.tool()
def subtract_numbers(x: float, y: float) -> float:
    """Subtract the second number from the first number."""
    print(f"📝 Calculating: {x} - {y}")
    return x - y


@mcp.tool()
def multiply_numbers(x: float, y: float) -> float:
    """Multiply two numbers together."""
    print(f"📝 Calculating: {x} * {y}")
    return x * y


@mcp.tool()
def divide_numbers(x: float, y: float) -> float:
    """Divide the first number by the second number."""
    if y == 0:
        error_msg = "Cannot divide by zero"
        print(f"❌ Error: {error_msg}")
        raise ValueError(error_msg)
    print(f"📝 Calculating: {x} / {y}")
    return x / y


if __name__ == "__main__":
    print("┌───────────────────────────────────────┐")
    print("│ MCP Calculator Server (SSE Mode) │")
    print("└───────────────────────────────────────┘")
    print("Server available at: http://localhost:8000")
    print("Connect to this server from the MCP SSE client agent")
    print("Run the client with: python 05_agent_with_mcp_sse.py")
    print("Press Ctrl+C to exit")

    # Run the MCP server with HTTP/SSE transport
    mcp.run(transport="sse")  # This starts an HTTP server with SSE transport

This code is very similar to our stdio server, with one key difference: instead of calling mcp.run(transport="stdio"), we call mcp.run(transport="sse"), which starts an HTTP server that clients can connect to.

Now, let's create a Semantic Kernel agent that connects to this server:

#!/usr/bin/env python3
# Semantic Kernel agent with MCP SSE plugin integration

import asyncio
import os
from pathlib import Path

from dotenv import load_dotenv
from semantic_kernel import Kernel
from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion
from semantic_kernel.connectors.ai.open_ai import OpenAIChatPromptExecutionSettings
from semantic_kernel.connectors.ai.function_choice_behavior import FunctionChoiceBehavior
from semantic_kernel.connectors.mcp import MCPSsePlugin
from semantic_kernel.contents import ChatHistory
from semantic_kernel.contents.streaming_chat_message_content import StreamingChatMessageContent
from semantic_kernel.contents.utils.author_role import AuthorRole
from semantic_kernel.functions import KernelArguments


async def main():
    # Load environment variables from .env file
    current_dir = Path(__file__).parent
    env_path = current_dir / ".env"
    load_dotenv(dotenv_path=env_path)

    print("Setting up the Math Assistant with SSE-based MCP calculator...")

    # Initialize the kernel
    kernel = Kernel()

    # Add an OpenAI service with function calling enabled
    api_key = os.getenv("OPENAI_API_KEY")
    if not api_key:
        print("Error: OPENAI_API_KEY environment variable is not set.")
        print("Please set it in your .env file or environment variables.")
        return

    model_id = "gpt-4o"  # Use a model that supports function calling
    service = OpenAIChatCompletion(ai_model_id=model_id, api_key=api_key)
    kernel.add_service(service)

    # Create the completion service request settings
    settings = OpenAIChatPromptExecutionSettings()
    settings.function_choice_behavior = FunctionChoiceBehavior.Auto()

    # Configure and use the MCP plugin using SSE via async context manager
    # Note: This assumes the SSE server is already running at this URL
    async with MCPSsePlugin(
        name="CalcServerSSE",
        url="http://localhost:8000/sse"  # URL where the MCP SSE server is listening
    ) as mcp_plugin:
        # Register the MCP plugin with the kernel after connecting
        try:
            kernel.add_plugin(mcp_plugin, plugin_name="calculator")
        except Exception as e:
            print(f"Error: Could not register the MCP plugin: {str(e)}")
            print("\nMake sure the MCP SSE server is running at http://localhost:8000")
            print("Run this command in another terminal: python 04_mcp_server_sse.py")
            return

        # Create a chat history with system instructions
        history = ChatHistory()
        history.add_system_message(
            "You are a math assistant. Use the calculator tools when needed to solve math problems. "
            "You have access to add_numbers, subtract_numbers, multiply_numbers, and divide_numbers functions."
        )

        # Define a simple chat function
        chat_function = kernel.add_function(
            plugin_name="chat",
            function_name="respond",
            prompt="{{$chat_history}}"
        )

        print("\n┌────────────────────────────────────────────┐")
        print("│ Math Assistant ready with SSE Calculator │")
        print("└────────────────────────────────────────────┘")
        print("Type 'exit' to end the conversation.")
        print("\nExample questions:")
        print("- What is the sum of 3 and 5?")
        print("- Can you multiply 6 by 7?")
        print("- If I have 20 apples and give away 8, how many do I have left?")

        while True:
            user_input = input("\nUser:> ")
            if user_input.lower() == "exit":
                break

            # Add the user message to history
            history.add_user_message(user_input)

            # Prepare arguments with history and settings
            arguments = KernelArguments(
                chat_history=history,
                settings=settings
            )

            try:
                # Stream the response
                print("Assistant:> ", end="", flush=True)
                response_chunks = []
                async for message in kernel.invoke_stream(
                    chat_function,
                    arguments=arguments
                ):
                    chunk = message[0]
                    if isinstance(chunk, StreamingChatMessageContent) and chunk.role == AuthorRole.ASSISTANT:
                        print(str(chunk), end="", flush=True)
                        response_chunks.append(chunk)
                print()  # New line after response

                # Add the full response to history
                full_response = "".join(str(chunk) for chunk in response_chunks)
                history.add_assistant_message(full_response)
            except Exception as e:
                print(f"\nError: {str(e)}")
                print("Make sure the MCP SSE server is running at http://localhost:8000")


if __name__ == "__main__":
    try:
        asyncio.run(main())
    except KeyboardInterrupt:
        print("\nExiting the Math Assistant. Goodbye!")
    except ConnectionRefusedError:
        print("\nError: Could not connect to the MCP SSE server.")
        print("Make sure the server is running: python 04_mcp_server_sse.py")
    except Exception as e:
        print(f"\nError: {str(e)}")
        print("The application has encountered a problem and needs to close.")

The key difference here is that instead of using MCPStdioPlugin, we're using MCPSsePlugin with the /sse endpoint URL and wrapping it in an async context manager:

async with MCPSsePlugin(
    name="CalcServerSSE",
    url="http://localhost:8000/sse"
) as mcp_plugin:
    # Use the plugin within this context

This pattern ensures proper connection setup and cleanup.

To use this approach:

- Start the MCP server in one terminal with python 04_mcp_server_sse.py

- Then run the agent in another terminal with python 05_agent_with_mcp_sse.py

This SSE approach offers several advantages:

- The server can run independently of the agent

- Multiple agents can connect to the same server

- The server can be hosted on a different machine or in the cloud

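- Because the server keeps running on its own, an agent can reconnect after a dropped connection (the example agent above does not implement retry logic itself)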
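For readers curious about what travels over an SSE connection, the sketch below parses the text-based event stream format: "field: value" lines, with a blank line closing each event. The parse_sse helper is hypothetical and the payloads (including the session id) are made up for illustration; in practice the MCP client library handles this framing for you.

```python
from typing import Dict, Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Group 'field: value' lines into events; a blank line ends each event."""
    event: Dict[str, str] = {}
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            if event:
                yield event
                event = {}
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        value = value[1:] if value.startswith(" ") else value
        if field == "data" and "data" in event:
            event["data"] += "\n" + value  # multiple data lines are joined with newlines
        else:
            event[field] = value


# Made-up stream contents for illustration
raw = [
    "event: endpoint",
    "data: /messages/?session_id=abc",
    "",
    ": keep-alive",
    "event: message",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}',
    "",
]
events = list(parse_sse(raw))
for item in events:
    print(item)
```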

Next Steps and Extensions

We've seen how to build a simple SK agent and extend it with MCP plugins for extra capabilities. This is a powerful pattern – even with our toy example, we effectively gave the LLM a new "ability" (math operations) by writing a few lines of Python and using MCP to integrate it. In real-world scenarios, the possibilities are vast. Here are some possible next steps or extensions you might consider:

Add more plugins and tools

You can expand the MCP server with additional tools (just add more functions decorated with @mcp.tool()). These could interface with databases, call external APIs, or perform computations. Your agent will then be able to handle a wider range of queries by delegating to the appropriate tool.

Similarly, you could attach multiple plugins to the SK agent – e.g., an MCP plugin for database access, another plugin for some OpenAPI-defined service, and maybe some in-process plugins for quick tasks. SK's agent framework is designed to handle multi-tool scenarios gracefully.

Integrate with enterprise systems

Using the plugin approach, you can connect AI agents to enterprise data and services. For instance, one could create an MCP server that exposes tools for an internal CRM or ERP system, enabling the AI agent to fetch business data or trigger workflows on behalf of a user (with the proper permissions and validations in place).

Because SK supports filters and policies, you can enforce checks – e.g. an SK filter can vet the input/output of a tool call to ensure compliance or safety. Logging and telemetry from SK can be piped into your monitoring systems for audit trails when the agent calls sensitive functions.
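The idea of vetting and logging each tool call can be sketched as a plain wrapper function. This is not SK's filter API: with_filter, BLOCKED_TOOLS, AUDIT_LOG, and the two example tools are hypothetical names chosen to show the pattern of checking policy before a call and recording the outcome afterwards.

```python
from typing import Any, Callable, List

AUDIT_LOG: List[str] = []
BLOCKED_TOOLS = {"delete_customer"}  # hypothetical policy


def with_filter(name: str, handler: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a tool so every call is checked against policy and recorded."""

    def guarded(**arguments: Any) -> Any:
        if name in BLOCKED_TOOLS:
            AUDIT_LOG.append(f"DENIED {name} {arguments}")
            raise PermissionError(f"Tool '{name}' is not allowed")
        result = handler(**arguments)
        AUDIT_LOG.append(f"OK {name} {arguments} -> {result}")
        return result

    return guarded


lookup = with_filter("get_customer", lambda customer_id: {"id": customer_id})
delete = with_filter("delete_customer", lambda customer_id: True)

print(lookup(customer_id=7))
try:
    delete(customer_id=7)
except PermissionError as error:
    print(error)
print(AUDIT_LOG)
```

Because the check lives in the host rather than in the tool, the same policy applies whether the tool runs in-process or behind an MCP server.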

Enhance the conversation flow

The current agent runs a basic chat loop with a single agent and a single plugin. SK provides more advanced constructs (like the AgentChat or planners) to handle richer multi-turn conversations and even orchestrate multiple agents working together. You could have one agent call an MCP plugin, then pass results to another agent, etc., to tackle complex tasks.

Combining plugins with SK's planning capabilities allows the AI to break down user requests and use the right tool in sequence (this is analogous to tools usage in AI chain-of-thought).

Experiment with other transports or cross-language tools

MCP is not limited to Python. You could write an MCP server in C# or Java (there are SDKs for those) and still connect it to a Python SK agent (or vice versa). This means if a certain library or capability is only available in another language, you can still expose it to your agent through MCP.

The interoperability provided by MCP was one of the design goals – e.g., reuse existing SK plugins as MCP tools across different platforms. This can future-proof your solution: if down the line another AI framework adopts MCP, your tools could be reused there as well.

Security and scaling considerations

As your agent's capabilities grow, consider how to secure each tool. MCP supports authentication mechanisms (you wouldn't want an open endpoint that anyone can call). You might integrate an authentication plugin or API key requirement in your MCP server.
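As one possible shape for an API key requirement, the sketch below checks a bearer token from request headers using a constant-time comparison. It is independent of any MCP or web framework: is_authorized, the MCP_SERVER_API_KEY variable name, and the development fallback value are assumptions made for this example.

```python
import hmac
import os

# Hypothetical variable name; a real deployment would load this from a secret store
EXPECTED_KEY = os.environ.get("MCP_SERVER_API_KEY", "dev-only-key")


def is_authorized(headers: dict) -> bool:
    """Accept a request only if its bearer token matches the expected key."""
    supplied = headers.get("Authorization", "")
    prefix = "Bearer "
    if not supplied.startswith(prefix):
        return False
    token = supplied[len(prefix):]
    # compare_digest avoids leaking how many leading characters matched
    return hmac.compare_digest(token.encode(), EXPECTED_KEY.encode())


print(is_authorized({"Authorization": "Bearer " + EXPECTED_KEY}))
print(is_authorized({"Authorization": "Bearer not-the-key"}))
print(is_authorized({}))
```

A check like this would run before any tool is dispatched, so unauthenticated callers never reach the tool handlers.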

Also, think about scaling – an SSE-based server could be load-balanced or run in a container. Semantic Kernel's design (decoupling the agent and tools) makes it feasible to scale the tool side independently if needed (for example, many agent instances could connect to one robust MCP server farm providing a heavy service like database queries).

Working Code Examples

All the code shown in this article is available in the code directory. To run these examples:

- Install the required packages:

cd code
pip install -r requirements.txt

- Create a .env file with your OpenAI API key:

OPENAI_API_KEY=your-key-here

- Run the examples:

  - Basic agent: python 01_basic_agent.py

  - MCP stdio agent: python 03_agent_with_mcp_stdio.py

  - MCP SSE server: python 04_mcp_server_sse.py (in one terminal)

  - MCP SSE agent: python 05_agent_with_mcp_sse.py (in another terminal)

Try asking the math assistant questions like:

- "What is the sum of 3 and 5?"

- "Can you multiply 6 by 7?"

- "If I have 20 apples and give away 8, how many do I have left?"

- "What is 15 divided by 3?"

By combining Semantic Kernel's flexible agent framework with the Model Context Protocol, developers can create powerful AI agents that bridge the gap between natural language and real-world actions/data. We've only scratched the surface with a simple example. In practice, you could enable your AI agents to do things like analyze documents, interact with cloud services, or execute custom business logic – all through plugins.

As Microsoft's SK and the MCP standard continue to evolve, we can expect even more seamless integration of AI into software development workflows, enabling the creation of sophisticated AI-driven applications.